Counterfactual Prediction with Time-Varying Treatments + G-Computation

Here I document an algorithm known as G-computation, which is a tool for doing counterfactual prediction in time series settings.

Set-up

We assume that we have iid data strings where:

Given the iid assumption, we suppress dependence on subject index . We have a full time series, , for both and . Here we let and for .

Let be a treatment variable on date t. When treatment is on and when treatment is off. We let denote a set of confounders on date . Notice that in almost all practical settings, is actually a vector of variables, but for ease of exposition it suffices to treat it as a single variable. In the following sections, anytime we include in a regression model, just keep in mind that there are in fact multiple associated coefficients, rather than just one. Finally, is an outcome of interest.

Because we are interested in counterfactual prediction, we denote as the potential outcome when one sets . If is binary, there are such quantities, one for each possible treatment regime . In general, these quantities are unobserved, while is the observed quantity in our dataset.

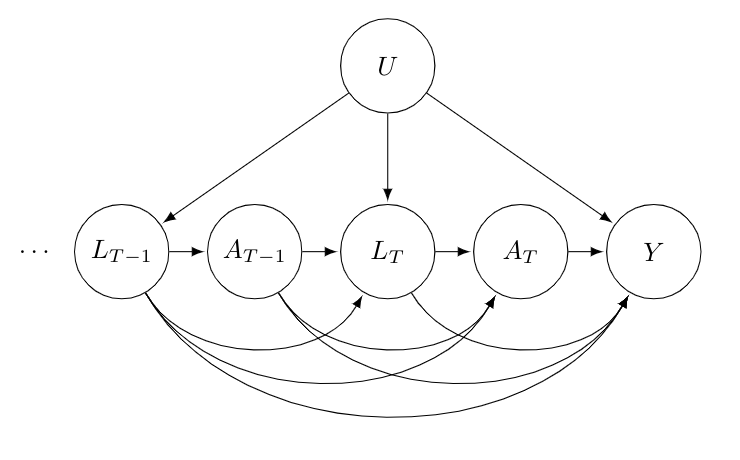

A DAG which describes the set-up above is as follows:

For simplicity, we only consider the last two periods, and , of our data string. It is reasonable to assume that all past confounders and past treatments affect all future confounders, treatment and the final outcome. Moreover, we are dealing with observational data and have tried to measure all possible confounders. However, it is likely there are some unobserved factors, , which influence the confounders, , and outcome . As a result, will arrows into , and .

Why can’t we use standard regression?

An intuitive approach would be to assume a linear model as follows:

We could fit this with OLS and set . The estimated conditional expectations come from the OLS fits for particular samples. The estimated outer expectation is an average of these fits over all the samples.

The above strategy would be valid if . This would be similar to the usual “selection on observables” assumption made in causal inference. However, the time series dependence in our set-up makes such an assumption highly unlikely.

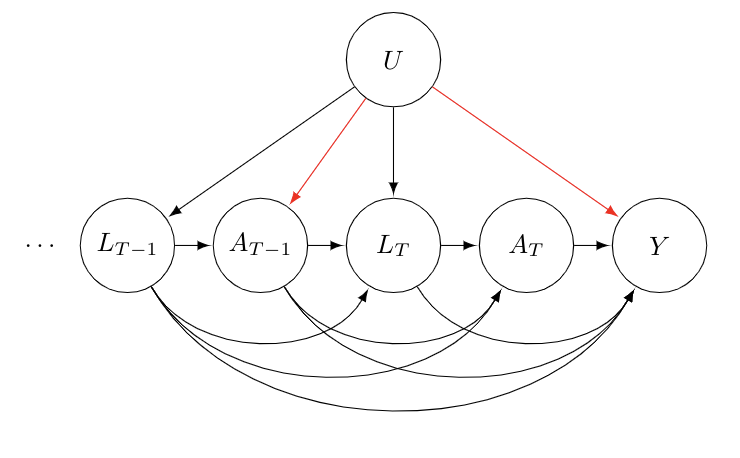

The core issue boils down to collider bias. From our DAG above, it is clear that we need to condition on . It points to both and and is thus a confounder. However, notice that both and point to . Thus, is a collider and including it in our regression induces a correlation between and . Since points to , the conditional independence statement above fails. By conditioning on , our DAG now includes the path denoted by the red arrows:

In addition to collider bias, when we condition on , we block a causal path of interest. Specifically, . Thus, it seems like we need to condition on as it is a confounder, but doing so will get us biased predictions for .

G-Computation

A solution to the above issue is G-computation. It was originally proposed in R1986 and additional details relevant to our set-up are included in RGH1999. The key is to make the following identification assumption:

Identification Assumption: For all :

That is conditional on the past history of treatments and confounders, treatment at any given time is random.

Combined with the standard causal assumptions of positivity and consistency, it is possible to estimate without bias.

Theorem: For any :

Here are the conditional densities of given the past.

Proof:

The steps here rely on repeated application of the law of total expectation and then the key identification assumption above. The second to last equality relies on consistency. The last equality relies on positivity which ensures that the integrand can be evaluated for all possible values and .

A natural estimator is to simply plugin a fitted outcome model and conditional density estimators learned from one’s data. A standard parametric approach would be to use GLMs and make a Markov assumption to limit the number of lags. Non-parametric methods ranging from kernel smoothers to modern ML methods can also be used here. Correctly specified parametric models will typically imply convergence to the truth at a -rate and normal limiting distributions. Nice convergence properties for general non-parametric methods are not as easily guaranteed, but do hold under specific smoothness assumptions. In all cases, standard errors can be evaluated via the bootstrap.

In general, when using non-linear models, the integral is intractable to compute analytically. Instead, one relies on a Monte Carlo simulation. Specifically, for , where is the number of simulation samples, one does the following:

-

For :

a. Set

b. Draw

-

Set

Then, . To get standard errors, we generate bootstrap samples and the whole algorithm is re-run starting with fitting the the outcome model and conditional density estimators.

Marginal Structural Models

An alternative to G-computation is to use marginal structural models which posit a specific parametric form for and use IPW estimators of . Non-parametric G-computation always implies a particular form of a MSM. Hence if the models are correctly specified, both techniques should provide the same answers. So, as a robustness check one should always try to fit both to make sure the results are similar. If not, one of the models is incorrectly specified. In the parametric case, this equivalence does not hold. There is an issue with parametric G-computation known as the “null paradox”: there may be no setting for one’s parametric models which accommodate the case where the outcome is conditionally dependent on past treatment but has no effect. Hence if you think you are in a regime where the treatment has no effect and non-parametric models seem infeasible (i.e. you have a small or very noisy sample), you should use MSMs. Finally, there are doubly-robust MSM approaches which may be useful in case you are worried about model specification or think you can model propensity scores well.