Deep Learning Basics

I plan on putting together some personal applied deep learning notes. They will pull together:

- More practical advice from these two textbooks, ZLLS2021 and GBC2016

- Other insights from my courses, notes available online and papers

The following post will start with DL basics.

Automatic Differentiation

Autodiff is a general framework to compute derivatives, not just of mathematical functions, but general programs with control flow. Standard techniques for evaluating derivatives have disadvantages:

- Manual calculus: not scalable, painful for complex functions

- Symbolic (à la Maple): expressions explode, only applicable to closed form functions

- Numerical: For a function need to run approximation times, unstable, depends on choice of when computing

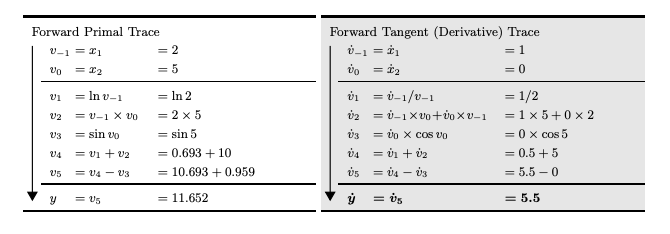

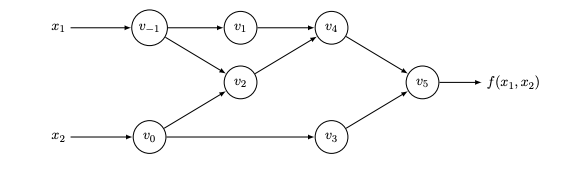

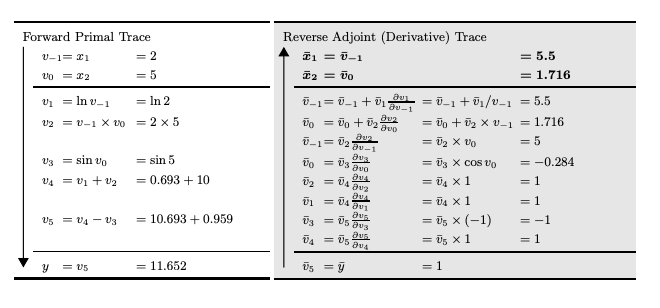

Autodiff is a symbolic/numerical hybrid. The core idea is that all algorithms can be written as a composition of simple functions with known derivatives. This is called the trace and can be represented as a DAG. Consider the example from BPRS2015 where . To evaluate derivatives, we do the following:

- Break down into its trace: , ,

- Compute symbolic derivatives for each op in the trace: , ,

- Apply the chain rule: Recurse through the trace and numerically compute the exact derivatives

Any control flow code is just eliminated: branches are taken, loops unrolled, etc. This leaves us with a linear execution trace. The above formulation is known as forward mode autodiff. It is efficient for functions where . It will require just passes to compute the Jacobian. It provides matrix-free way of for evaluating Jacobian-vector products. Specifically:

can be computed in a single forward pass by initializing . For functions where , like neural nets, we use reverse mode autodiff. Here the derivatives are propogated back from a given output. Again, consider our example and let . If we define , then we see the total derivative with respect to is:

We can compute such values in a two step procedure. First, run a forward pass to compute the intermediate values of and track the dependencies in the DAG. Second, propogate the derivatives backward from outputs to inputs.

This process will require passes to compute the Jacobian of . Reverse mode provides a matrix-free way of computing transposed Jacobian-vector products. Specifically:

can be computed in a single forward + backward pass by initializing . Note that when , that means we can get the gradient in a single pass.

The Python package autograd wraps numpy ops to support autodiff. Reverse mode autodiff is also what is going on when you do x.backward() in PyTorch.

Backpropagation

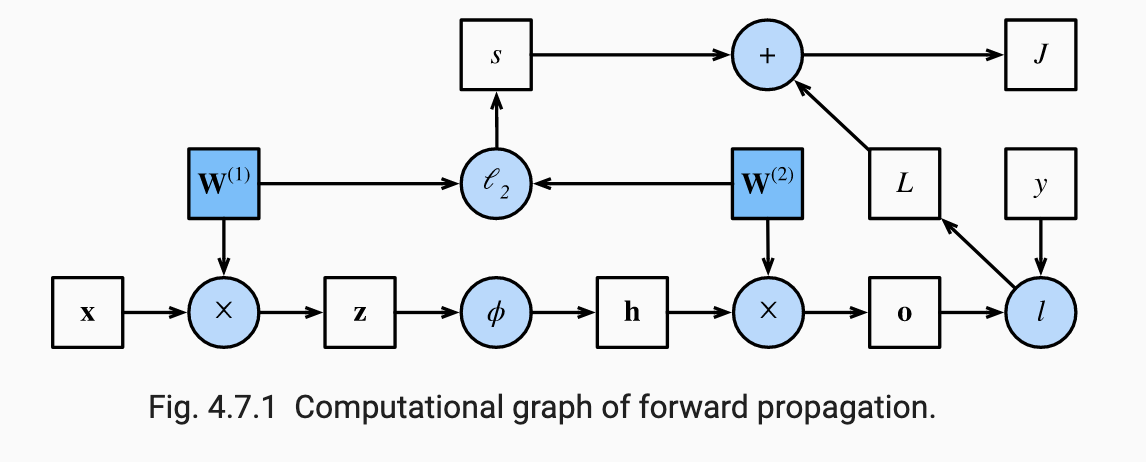

As an example of how NNs use reverse autodiff, consider a one-hidden layer MLP with weight regularization. For simplicity, assume there are no bias terms, so all the trainable parameters are where and .

First, in the forward pass we take an input example and compute the following:

The objective function for that example is computed as:

where might be cross-entropy loss and . The following image from ZLLS2021 shows the DAG associated with this NN:

Second, in the backward pass, we start with the following:

Then, we compute the gradient of the with respect to outputs :

Next, we compute the gradient of the regularization with respect to :

Then, we can compute the gradient for :

For the gradient of , we need to continue:

where is the element wise multiplication operator. Finally, we get:

The above steps are computationally efficient compared to forward mode autodiff in two ways:

- First, when computing we were able to avoid duplicate re-computations of the component of the gradient associated with the hidden layer and beyond. Thinking about a NN as a graph, forward mode is while reverse mode is .

- Second, note that was a vector in . Computing only required matrix-vector products. Forward mode tracks how changes in pass through to and would require matrix-matrix products since is in between. So, reverse mode takes advantage of the fact that we only care about how weight changes affect the final loss to reduce the number of matrix-matrix computations.

The downside of reverse mode autodiff is memory complexity. It requires storage of all intermediate quantities from the forward pass to compute gradients. Memory requirements scale proportionally to the number of layers and batch size. So, training deep networks with large batches can lead to out of memory errors. That is with backward mode we pay is memory cost.

Softmax Operation

For classification, we need to transform real-valued logits to a discrete probability distribution . The softmax operation does this transformation in a way that makes it differentiable, ensures and . Specifically, we have:

Cross-Entropy Loss

Say we have classes. Minimizing cross-entropy loss is equivalent to minimizing the negative log likelihood of our data where we assume that . We have:

Note that if ; that is we predict class with perfect certainty. Else, . We have:

The derivative with respect to a logit becomes:

This result is similar to OLS where the gradients of square loss are of the form . The gradients of any EFM model are of this form, making them trivial to compute. All of this math holds even when our label is not a binary vector but a distribution of the form . In that case, we can think of cross-entropy in information-theoretic terms. We are minimizing:

with if and otherwise.

Handling Numerical Issues with Softmax

If some of the logits are very large, then might be larger than the largest number than can be handled by certain data types. A simple solution is to subtract from all the logits. This does not change the softmax outputs:

Now we face another issue: might be very negative and might be close to 0. Due to finite precision, these values may be rounded to 0. And then we will have . However, recall that ultimately we plug in the softmax outputs into cross-entropy loss where we take a log. Hence we get:

Hence we can avoid computing by directly computing in the cross-entropy loss function. Finally, we can take advantage of the LogSumExp trick:

These tricks are implemented in torch.nn.CrossEntropyLoss().

Multilayer Perceptrons

A -layer MLP is given by:

where are activation functions which usually operate element or row wise and and are the trainable model weights and biases. Note that it is key that are non-linear. Otherwise, we are just building a a single-layer linear model with more parameters than necessary.

MLPs are known as universal approximators. Several different statements exist formalizing this intuition. One is that three-layer MLPs can approximate any -Lipschitz function with neurons and ReLU activations such that error is bounded by :

Finding such a in a computationally efficient manner is the hard part. Also, not the exponential dependence on dimension here.

Activation Functions

-

ReLU: . ReLU is common because the derivatives are well-behaved. They vanish when or equal one when . This makes optimization easier and mitigates issues with vanishing gradients.

-

Parametrized ReLU: . Some information gets through even when .

-

Sigmoid: . Maps inputs to . We have . Hence as or . Small gradients for very small or very large can lead to slow updated during GD.

-

Tanh: . Maps inputs to and exhibits point symmetry around the origin. We have . Hence as or . Sometimes preferable to sigmoid for hidden layers because mean of output tends to be close to 0, centering the data for future layers.

Regularization

Weight Decay

Similar to high-dimensional linear regression, a common strategy to regularize is to add or penalties on the weight matrices to force the model to fit smoother functions. Whether the bias terms are penalized varies across applications, but usually it is not penalized in the output layer. For simplicity, assume all the weight matrices are vectorized are represented by parameter . The new regularized loss is of the form:

The GD steps look like:

So, the weights are shrunk by a constant factor each step before perfoming the standard GD update. We can also study what happens over the course of the full training procedure. Let , the unregularized loss. Then, by a second order Taylor approximation around :

where is the hessian of at . Since is the minimizer, it is PSD. Note part of the first order term disappears because . The minimum of occurs when :

Note as , we have . Else taking the eigendecomposition :

This is exactly the same analysis as ridge regression. We see that weight decay aligns the components of that are aligned with the -th eigenvector of are scaled by where are the eigenvalues. Directions in the parameter space which do not contribute much to reducing and hence have small eigenvalues are significantly shrunk by weight decay.

We can similarly do regularization. Then, we have:

The GD updates look like:

where is applied component-wise. If we assume the Hessian is diagonal:

The component-wise solution is given by:

Again, this is exactly the same as the Lasso analysis. The thresholding operation will encourage shrinkage plus sparsity.

In PyTorch, penalization is automatically supported with by adding weight_decay=lambda as an argument to torch.optim.SGD(). You have to define a custom loss functionf or penalization.

Early Stopping

Early stopping refers to terminating training when you start to see validation error trend upward over some number of past epochs. You return model parameters from the last kink you saw in the risk curve. Such a strategy can be viewed as an efficient algorithm for hyperparameter search. The hyperparameter here is the number of training steps. Unlike grid search, a single training run automatically evaluates the loss for several values of this hyperparameter. Also, it is completely unobtrusive, not changing the loss function and learning dynamics. The extra cost comes from evaluating on the validation set in each step and having to store the optimal model parameters.

Say we restrict the number of optimization steps to and assume that the gradient of the loss is bounded by . Then, early stopping restricts where is the initialization. For more intuition, lets analyze a linear model with square loss and optimal . We will show that early stopping is equal to regularization. We have:

The GD iterates:

Using the eigendecomposition :

If is chosen such that and , then we have:

Recall from Equation , we have for the regularization:

The equality here follows from the Woodbury matrix identity. Compared to Equation , they are equivalent when , and are chosen such that:

If the eigenvalues are sufficiently small, one can show that this equivalence corresponds to . That is the number of training iterations is inversely proportional to the parameter . In context of early stopping, we see that parameter directions with signifcant curvature learn early compared to directions of less curvature.

Dataset Augmentation

More training samples can improve generalization. If we do not have that, we can create fake data and add it to the training data. In computer vision, add samples with rotations or where a few pixels have been translated can force the NN to be invariant to these transformations. Make sure to not rotate a “9” to a “6” if you are asking your model to classify digits. Adding noise to your inputs can be shown in many cases to be equivalent to adding a weight norm penalty. For a proof, look at B1995.

Adding Noise to Weights

We can also add noise to the parameters of a model. Say for each input sample, we include a pertubation on the network weights. If we are using squared loss, we want to minimize the risk:

For sufficiently small , this can be shown to be the same as minimizing the unperturbated risk with a regularization term . So, the solution is pushed to regions in the parameter space where perturbations in the weights have a small effect on the predictions. We find minima surrounded by flat regions. Note that this only works for non-linear models. In linear regression, the regularization term becomes , which does not depend on parameters .

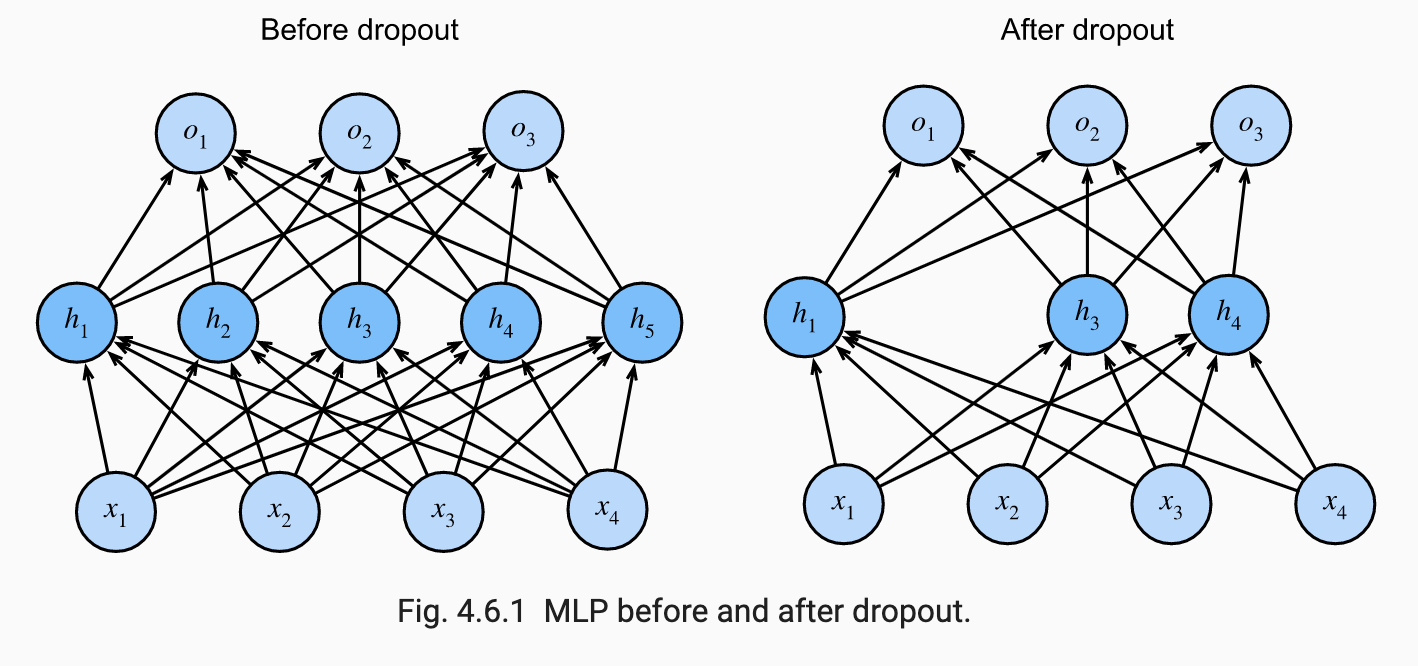

Dropout

Dropout is a technique developed in SKHSS2014. The idea is to inject noise to the hidden units of a NN. Throughout the training process, during forward propogation, some fraction of neurons in a layer are randomly zeroed out before computing the subsequent layer. The original authors proposed a slightly different interpretation. They argued that overfitting in NNs is characterized by co-adaptation where each layer relies on a specific pattern activations in the previous layer to fit the data well. Dropout forces neurons to learn more robust features since they cannot rely on other units to correct its mistakes.

The image below from ZLLS2021 shows how dropout amounts to training a smaller sub-network of the original NN:

This provides an interpretation of dropout as an approximation to bagging. Specifically, consider the exponential number of sub-networks that can be formed by masking certain neurons in a NN. In dropout, for each training example in a mini batch, a different sub-network is sampled and then we run the forward pass, backprop and parameter update. If corresponds to the mask vector, dropout training can be viewed as minimizing . We can get an unbiased estimate the gradient of this function by sampling values of . Unlike bagging, the base learners share their parameters and are not trained to convergence. Parameter sharing makes it possible to “ensemble” exponentially many sub-networks in a memory-efficient manner. Much like bagging, each sub-network sees a subset of the original training examples sampled with replacement due to minibatches.

The noise added during dropout should be added in an unbiased manner. The expected value of a layer holding all other layers fixed should be equal its value in the absence of noise. Hence an activation function is replaced with random variable :

where is the dropout probability. Notice that . Since dropout is disabled at test time, this scaling ensures the expected total input to a neuron at test time is the same as the expected total input to a neuron at train time.

In practice, implementing dropout during training is as simple as drawing random uniforms and multiplying a hidden layer by mask to convert . Usually, the input layer has because we do not want to turn off too many of the input features.

Adding dropout in PyTorch is as easy as using the torch.nn.Dropout class in your NN specification.

Vanishing and Exploding Gradients

Consider a NN with layers, input and output where each layer is a transformation parameterized by matrix . Let , then we have:

We can write the gradient with respect to as follows:

The gradient is the product of matrices and a vector. Depending on the eigenspectrum of these submatrices the gradient can end up being very small or very large. For simplicity, assume that and has eigendecomposition . Then, we have:

Hence, for deep NNs, if the eigenvalues are larger than in magnitude, the gradient explodes. If the eigenvalues are smaller than is magnitude, the gradient vanishes. Exploding gradients make learning unstable. Vanishing gradients make it impossible to figure out where to move in parameter space. This problem is pronounced in RNNs where we are using the same weight matrix over and over again in many layers, but can also show up elsewhere.

One source of vanishing gradients is the choice of activation function. For example, consider the sigmoid activation. Since when and , if you have small weights multiplied by these activation gradients, the loss gradient can approach zero at rate exponential in the number of layers during backprop. Early layers train very slowly. ReLU solves this problem.

Exploding gradients are driven by large weights. It is more of a problem with ReLUs compared to squashing functions like the sigmoid and tanh. But for all activations, sufficiently large weights can cause the loss gradient to explode at an exponential rate. As an example, consider the following 3-layer NN where each layer has a single neuron:

Then the gradient with respect to the first weight is:

Note that . But for a large number of layers the term can blow up when is large and . Similar behavior is possible for the sigmoid activation.

Weight Initialization

Recall that weight initialization is not an issue for convex problems such as linear or softmax regression since the appropriate optimization algorithms are guaranteed to reach a global minimum. For non-convex problems, initialization affects the solution to which you converge and the numerical stability of the algorithm.

Breaking Symmetry

Fully connected NNs are symmetric in their parametrization. Imagine a simple MLP with one hidden layer and two units. We can permute the weights in and and obtain the exact same function. Hence there is permutation symmetry among the hidden units of each layer.

Such symmetry can become a problem during training. If the hidden units of a layer share the same input/output weights and activations, they compute the same values and receive the same gradient during backprop. The GD updates for each unit’s weights will the same. So, the hidden layer would behave as a single unit, wasting the NN’s capacity.

To break symmetry, we need to add specific forms of randomness to the training process. For a single hidden layer MLP, we will see the undesired behavior if we initialize for some constant . Randomly initializing the elements in to small values from a Gaussian or uniform is the standard strategy. Technically, one can continue to initialize the weights into the output units, . The output units receive different gradients during backprop based on the functional form of the loss. But if you start with , it will take two iterations to make and finally start updating .

Other strategies can work even with constant hidden unit weights. For example, dropout would break the symmetry, but note minibatch SGD is not sufficient. Alternatively, one can set random weights to 0 and random biases are sufficient. But in that case, a -layer NN will take iterations to get non-zero weights in all layers.

Xavier Initialization

A commonly used initialization is the Xavier initialization from GB2010 which helps mitigate issues with vanishing/exploding gradients. The idea is that with randomly initialized weights, the variance of a unit’s output scales with the number of inputs. Consider an output from a fully-connected layer without non-linearities. Let there be inputs and let be the associated weights. We have:

Assume that , , and are independent of . Then, and . One way to keep the variance fixed is to set . Considering backpropogation, one can show that the variance of the gradient blows up unless . Since you cannot simultaneously satisfy the conditions, the Xavier initialization instead takes . That is:

Typically, this variance is used with a Gaussian. Instead, noting that the variance of a , one can sample from:

Handling Distribution Shift

Say we train our model on data from distribution , but our test set is . Without any assumptions on the relationship between and , we cannot do anything. There are certain restricted assumptions we can make and still make things work:

- Covariate shift: , but . Think “ causes ”.

- Label shift: but . Think “ causes ”. For example, outcome is a disease and covariates are symptoms. In some degenerate cases, we can have label and covariate shift simultaneously. For example, if is a point mass, i.e. the label is deterministic. It is better to use label shift methods in this case, since they are designed for low-dimensional objects, outcomes, as opposed to high-dimensional objects, covariates.

- Concept shift: Definition of labels changes over time

Say our train distribution is and our test distribution is . First, consider covariate shift where we have . Hence:

That is we need to re-weigh samples by propensity scores:

To use this in ERM, we need estimates . Any approach will require samples from both and , but only the covariates, not the labels. The simplest approach is to build a classifier which distinguishes samples from the train and test. Let mean that a sample comes from . Assume for simplicity . Then, using logistic regression, we have:

The final algorithm is to solve a weighted ERM problem with these estimated importance weights. Usually, we clip the weights at some constant to lower variance when the denominator is very small. Theoretically, we need a positivity assumption. That is whenever , we need .

For label shift, shift a similar analysis yields that we need to estimate:

Assume we are dealing with a -class classification problem. Label shift tends to be easier to handle statistically because if we have a good classifier on the train distribution, then we do not have to deal with potentially high-dimensional covariates . What we do is estimate a confusion matrix with predictions on a validation set (constructed via sample splitting). The columns represent the ground truth and rows are predicted categories. We do not have access to test labels, but we do have access to test covariates. So, we can also get the distribution over predicted categories in the test set, . Then:

This result follows from the fact that on the test samples since only depends on through and we make the label shift assumption. Hence we have:

Assuming a sufficiently accurate classifier, is invertible and we can estimate . Getting an estimate is simple from our labeled train samples. Finally, we plug in into a weighted ERM.

For concept shift, we usually assume that definitions are changing slowly over time. So, we simply collect more samples over time and continue performing parameter updates to our existing NN.